GDPR and Facial Recognition Technology: A Balancing Act between Privacy and Innovation


Staff

This article provides a comprehensive analysis of the complex relationship between the European Union's General Data Protection Regulation (GDPR) and the rapidly advancing field of Facial Recognition Technology (FRT). While FRT presents transformative benefits across public security, healthcare, retail, and personal convenience, its core operational model—the large-scale collection, processing, and storage of unique and immutable biometric identifiers—creates a fundamental tension with the GDPR's foundational principles. The regulation's emphasis on data minimisation, purpose limitation, and the stringent protection of special category data establishes a high legal threshold for any FRT deployment.

Key legal hurdles for organizations seeking to use FRT include establishing a lawful basis for processing under both Article 6 and the more demanding Article 9 of the GDPR. The gold standard of "explicit consent" is impractical or legally invalid in many common FRT scenarios, such as public surveillance or employer-employee relationships. This forces reliance on narrower, more complex justifications like "substantial public interest," which require a robust and specific legal framework that has often been found lacking in practice. Furthermore, the mandatory Data Protection Impact Assessment (DPIA) is not a mere formality but a substantive legal obligation, and deficient DPIAs have been a key factor in regulatory enforcement actions.

Beyond legal compliance, FRT amplifies significant societal risks, including the erosion of anonymity through mass surveillance, the perpetuation and magnification of societal biases through flawed algorithms, and acute data security vulnerabilities, given that compromised biometric data cannot be changed. This article examines the clear and consistent direction from European regulators: the European Data Protection Board (EDPB) has issued guidance and opinions, while national Data Protection Authorities (DPAs) have levied substantial fines and issued prohibitive orders against non-compliant uses of FRT. The analysis concludes with a set of actionable recommendations for policymakers, technology developers, and data controllers, advocating for a path forward that embeds "Privacy by Design" into the core of technological innovation and ensures a rights-respecting balance between progress and protection.

I. The Regulatory Foundation: GDPR and Biometric Data

1.1 Defining the Data: From Image to "Personal Data"

The scope of the General Data Protection Regulation is triggered by the processing of "personal data." Under Article 4(1) of the GDPR, this is defined as any information relating to an identified or identifiable natural person, referred to as the 'data subject'. A facial image, whether a static photograph or a frame from a video feed, unequivocally meets this definition. An individual can be identified directly from their facial image or indirectly by combining it with other information.

The technical process of FRT reinforces this classification. The technology does not simply view an image; it performs a series of operations to create a unique digital representation of a face. This "facial signature," "faceprint," or "biometric template" is a numerical vector or mathematical formula derived from the unique geometry of an individual's facial features. Although this template is not a visual image itself, it is intrinsically linked to an identifiable person and is used precisely to single them out from others. Therefore, this template is also considered personal data under the GDPR. The regulation's definition of "processing" is broad, encompassing any operation performed on personal data, including collection, recording, structuring, and storage. Notably, this includes even transient processing, where a biometric template may exist for only a fraction of a second before being compared and deleted. This brief existence is still considered processing and falls within the GDPR's purview.

1.2 The Special Category Threshold: The Significance of "Unique Identification"

The GDPR provides a higher level of protection for certain types of data deemed particularly sensitive, known as "special categories of personal data." Article 9(1) lists these categories, which include data revealing racial or ethnic origin or political opinions, and data concerning health. Also included in this list is "biometric data for the purpose of uniquely identifying a natural person".

This phrasing creates a critical legal gateway. Article 4(14) defines "biometric data" broadly as personal data resulting from specific technical processing of physical, physiological, or behavioural characteristics that "allow or confirm the unique identification" of a person, such as facial images. However, it is the purpose of the processing that determines whether this biometric data becomes special category data under Article 9. If the explicit goal is to uniquely identify an individual (for instance, to grant access to a secure area or to find a specific person in a crowd), then the processing falls under Article 9 and its strict prohibitions and conditions.

This distinction creates a potential legal battleground. A company could argue that its use of FRT is not for unique identification but for facial characterization—analyzing a face to estimate demographic attributes like age or gender for targeted advertising or analytics. In such a scenario, the data controller might assert that the processing is governed only by the less stringent requirements of Article 6, thereby avoiding the need for explicit consent or another Article 9 condition. This places a significant burden on supervisory authorities to scrutinize the stated purpose of an FRT system against its technical capabilities and the potential for "function creep," where a system initially designed for characterization is later repurposed for identification. The initial DPIA is therefore essential in cementing and limiting the processing purpose from the outset to prevent such circumvention.

1.3 Core Principles Under Pressure: An Inherent Conflict

The deployment of FRT, particularly at scale, creates an inherent conflict with several of the GDPR's core data protection principles as laid out in Article 5.

Data Minimisation (Article 5(1)(c)): This principle mandates that personal data collected must be "adequate, relevant and limited to what is necessary" for the specified purpose. Many FRT applications, especially live facial recognition (LFR) in public spaces, are fundamentally maximalist. To identify a small number of individuals on a watchlist, these systems capture and process the biometric data of every person who passes before the camera. This practice of collecting everyone's data to find a few is the antithesis of data minimisation, representing a structural, not merely incidental, violation of the principle. This suggests that without a radical redesign, such systems are irreconcilable with this core tenet of GDPR, a conclusion echoed in the EDPB's calls for a ban on such practices.

Purpose Limitation (Article 5(1)(b)): Data must be collected for "specified, explicit and legitimate purposes" and not be further processed in a manner that is incompatible with those purposes. The potential for function creep with FRT is immense. A system deployed for a narrow security purpose, such as identifying known shoplifters, could easily be repurposed for tracking employee productivity, analyzing customer shopping habits for marketing, or even social scoring. Each of these would likely constitute an incompatible secondary purpose, requiring a new and separate legal basis and transparent communication with data subjects.

Storage Limitation (Article 5(1)(e)): Personal data must be kept in a form which permits identification of data subjects for "no longer than is necessary" for the purposes for which the data are processed. The business model of companies like Clearview AI, which involves creating vast, permanent, and ever-expanding databases of facial templates, is in direct contravention of this principle. The EDPB has strongly reinforced this principle, advocating in its opinion on airport FRT for the shortest possible retention periods and models where data is deleted almost immediately after use.

Accountability (Article 5(2)): The data controller is not only responsible for complying with the GDPR but must also be able to demonstrate that compliance. This places a significant administrative and procedural burden on any organization deploying FRT, which must maintain meticulous records of its processing activities, conduct and document a thorough DPIA, and be prepared at any time to provide evidence to supervisory authorities justifying the lawfulness, necessity, and proportionality of its actions.
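The storage limitation and accountability principles can be illustrated with a minimal Python sketch of a template store that deletes each biometric template once a fixed retention period has elapsed and records every deletion. The class `RetentionLimitedStore`, its method names, and the 60-second period in the usage example are hypothetical choices for illustration; the GDPR prescribes no specific period, and a real system would also need secure deletion and access controls.

```python
from __future__ import annotations

import time
from typing import Callable, Optional


class RetentionLimitedStore:
    """Hypothetical store that keeps biometric templates only for a fixed period."""

    def __init__(self, retention_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.retention_seconds = retention_seconds
        self._clock = clock  # injectable so the behaviour can be tested
        # subject_id -> (template, time at which the template was stored)
        self._templates: dict[str, tuple[list[float], float]] = {}
        self.audit_log: list[str] = []  # evidence for accountability (Art. 5(2))

    def add(self, subject_id: str, template: list[float]) -> None:
        self._templates[subject_id] = (template, self._clock())

    def get(self, subject_id: str) -> Optional[list[float]]:
        # Purge first, so an expired template can never be read back.
        self.purge_expired()
        entry = self._templates.get(subject_id)
        return entry[0] if entry is not None else None

    def purge_expired(self) -> list[str]:
        """Delete every template older than the retention period."""
        now = self._clock()
        expired = [sid for sid, (_, stored_at) in self._templates.items()
                   if now - stored_at >= self.retention_seconds]
        for sid in expired:
            del self._templates[sid]
            self.audit_log.append(f"deleted template {sid} at t={now}")
        return expired


# Usage with a simulated clock (values in seconds).
fake_now = [0.0]
store = RetentionLimitedStore(retention_seconds=60, clock=lambda: fake_now[0])
store.add("visitor-1", [0.12, 0.85, 0.33])
fake_now[0] = 30.0
print(store.get("visitor-1"))  # [0.12, 0.85, 0.33]: still within the period
fake_now[0] = 61.0
print(store.get("visitor-1"))  # None: the template has been purged
```

Injecting the clock keeps the retention logic testable without waiting in real time, and the audit log gives the controller a record it can show a supervisory authority.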

II. The Technology in Focus: Understanding Facial Recognition

2.1 From Pixels to Identity: The FRT Process

Facial recognition technology links a visual image to a likely identity through a multi-step computational process. This process, powered by artificial intelligence and computer vision, is broadly similar across most applications.

Step 1: Face Detection: The process begins with software that scans a digital image or video frame to detect and isolate human faces, distinguishing them from other objects in the background.

Step 2: Feature Extraction: Once a face is detected, the algorithm analyzes its geometry. It locates key facial landmarks, often called "nodal points," and measures the relationships between them, such as the distance between the eyes, the width of the nose, the contour of the jawline, and the shape of the cheekbones.

Step 3: Template Creation: These measurements are then converted into a compact numerical representation, typically a vector of numbers. This digital representation is known as a "faceprint" or "biometric template". Modern systems typically use deep convolutional neural networks (CNNs), which learn distinguishing features directly from face images rather than relying solely on hand-defined measurements, to create these highly detailed and distinctive templates.

Step 4: Matching: The newly generated template is compared against a database of pre-existing templates to find a match. This comparison can serve two primary functions:

Verification (1:1 Matching): This is a one-to-one comparison to confirm a person's claimed identity. The system compares the live template to a single, known template stored on file, such as when a user unlocks their smartphone with their face.

Identification (1:N Matching): This is a one-to-many comparison to determine if an unknown person is present in a database. The system compares the live template against a large gallery of stored templates to find potential matches, as when law enforcement searches for a suspect in a crowd. The system calculates a "similarity score" for each comparison; if the score exceeds a predefined threshold, it flags a potential match.
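Steps 3 and 4 can be sketched in Python, assuming templates have already been produced (for example by a CNN, which is not modelled here) and using cosine similarity as the similarity score. The function names, the toy three-dimensional templates, and the 0.8 threshold are illustrative assumptions; production templates often have 128 or more dimensions, and each vendor defines its own score and threshold.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Similarity score between two templates; 1.0 means identical direction."""
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("templates must be non-zero vectors")
    return dot / (norm_a * norm_b)


def verify(probe: Sequence[float], enrolled: Sequence[float],
           threshold: float = 0.8) -> bool:
    """1:1 verification: does the probe match the one claimed identity?"""
    return cosine_similarity(probe, enrolled) > threshold


def identify(probe: Sequence[float], gallery: Dict[str, Sequence[float]],
             threshold: float = 0.8) -> List[Tuple[str, float]]:
    """1:N identification: gallery entries whose score exceeds the threshold,
    best first. These are candidates, not confirmed identities."""
    scored = [(name, cosine_similarity(probe, tmpl)) for name, tmpl in gallery.items()]
    candidates = [(name, s) for name, s in scored if s > threshold]
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


probe = [0.9, 0.1, 0.4]
gallery = {"person_a": [0.88, 0.12, 0.41], "person_b": [0.1, 0.9, 0.2]}
print(verify(probe, gallery["person_a"]))  # True
print(identify(probe, gallery))            # only person_a clears the threshold
```

In a live deployment, every face the camera detects becomes a `probe`, which is the code-level reason why 1:N systems process the biometric data of everyone who passes, not only of those on the watchlist.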

It is crucial to recognize that this matching process is probabilistic, not deterministic. FRT does not provide a definitive "yes" or "no" answer but rather a statistical likelihood of a match. This technical reality has profound legal consequences. A high-probability "match" from an FRT system is not factual proof of identity but an algorithmic suggestion. This necessitates robust human oversight to verify the result before any action is taken that could have a legal or similarly significant effect on an individual, such as an arrest or denial of service. Without this human intervention, such actions could constitute a violation of GDPR's Article 22, which places strict limits on decisions based solely on automated processing. The probabilistic nature of the technology legally mandates human review as a critical safeguard, not merely an operational best practice.
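The human-review safeguard can be expressed as a simple gate in Python: an above-threshold score never triggers action directly but only places the case in a review queue, and an outcome becomes final only once a human reviewer has confirmed or rejected it. The names `CandidateMatch`, `Outcome`, and `route_match` are hypothetical, and whether a particular review process is meaningful enough to satisfy Article 22 is a legal question that code alone cannot settle.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    NO_MATCH = "no_match"
    PENDING_HUMAN_REVIEW = "pending_human_review"
    CONFIRMED = "confirmed"
    REJECTED = "rejected"


@dataclass(frozen=True)
class CandidateMatch:
    subject_id: str
    score: float  # similarity score: an algorithmic suggestion, not proof


def route_match(match: CandidateMatch, threshold: float,
                reviewer_decision: Optional[bool] = None) -> Outcome:
    """Never act on a score alone.

    reviewer_decision is None until a human has examined the case;
    True means the reviewer confirmed the identity, False means rejected.
    """
    if match.score <= threshold:
        return Outcome.NO_MATCH
    if reviewer_decision is None:
        # Above threshold, but no human has looked yet: hold, do not act.
        return Outcome.PENDING_HUMAN_REVIEW
    return Outcome.CONFIRMED if reviewer_decision else Outcome.REJECTED


match = CandidateMatch(subject_id="watchlist-17", score=0.91)
print(route_match(match, threshold=0.8))                           # Outcome.PENDING_HUMAN_REVIEW
print(route_match(match, threshold=0.8, reviewer_decision=False))  # Outcome.REJECTED
```

The design makes "pending review" the only possible result of a high score on its own, so any downstream action has to wait for an explicit human decision.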

2.2 The Spectrum of Innovation: Applications and Benefits

The applications of FRT are diverse and expanding, offering significant benefits in efficiency, security, and convenience across numerous sectors.

Public Security and Law Enforcement: FRT is a powerful tool for law enforcement agencies. It is used to generate investigative leads by matching suspect images from surveillance footage against mugshot databases, to identify victims of crime or accidents who are unable to identify themselves, and to find missing persons. Numerous case studies have demonstrated its effectiveness in solving cold cases, combating human trafficking, and even exonerating individuals who were wrongfully accused.

Healthcare: In the healthcare sector, FRT is being deployed for a range of innovative purposes. It can be used for patient identification at check-in to prevent medical errors and ensure the correct patient record is accessed. It is also used to monitor elderly or vulnerable patients for safety, for instance, in assisted living facilities. Cutting-edge applications even use FRT to assist in the diagnosis of rare genetic disorders by analyzing subtle patterns in facial morphology that may be invisible to the human eye.

Retail and Customer Experience: Retailers are adopting FRT to enhance the shopping experience. The technology can identify VIP or loyalty program members as they enter a store, allowing staff to provide personalized service and tailored promotions. It can also be used to analyze aggregate customer demographics and emotional responses to store layouts and products, providing valuable business insights. Furthermore, FRT enables frictionless, contactless payment systems, streamlining the checkout process.

Access Control and Personal Devices: One of the most widespread and accepted uses of FRT is authentication, where it offers both security and convenience. It provides a seamless method for unlocking personal devices like smartphones and laptops. It is also increasingly used for physical access control in corporate and residential buildings, replacing traditional keys, fobs, or access cards with a contactless and more secure biometric credential.

The public's perception and acceptance of these applications are highly contextual. While the use of FRT for personal convenience, such as unlocking one's own device, is widely embraced, its application for mass surveillance in public spaces by government or commercial entities is met with significant opposition and concern. This dichotomy is critical for legal analysis under the GDPR, particularly for the balancing test required when relying on "legitimate interests" (Article 6(1)(f)) as a lawful basis, which, where FRT is used for unique identification, must still be paired with an Article 9 condition. An organization's interest in using FRT must be weighed against the rights, freedoms, and reasonable expectations of individuals. The public's starkly different expectations of privacy in different contexts mean that a legitimate interest argument that might succeed for an office access system would almost certainly fail for a system that tracks shoppers in a public square.

III. The Legal Gauntlet: Lawful Processing of Facial Data

3.1 Navigating Article 6 and Article 9: The Search for a Lawful Basis

Any organization processing facial data under the GDPR's jurisdiction must first establish a valid lawful basis under Article 6. If the processing involves using biometric data for the purpose of unique identification, it is classified as special category data, and a second, more stringent condition from Article 9 must also be satisfied. Navigating these dual requirements presents a significant legal challenge for most FRT applications.

The Challenge of "Explicit Consent" (Article 9(2)(a)): For special category data, the GDPR requires "explicit consent," which is a higher standard than regular consent. It must be freely given, specific, informed, and unambiguous, typically confirmed through a clear written or oral statement.

In public surveillance scenarios, obtaining valid explicit consent is practically impossible. A controller cannot reasonably obtain a clear, affirmative agreement from every individual passing through a public space monitored by LFR.

In the employment context, the inherent power imbalance between an employer and an employee makes it difficult to argue that consent is "freely given." An employee may feel coerced into agreeing, fearing negative consequences for refusal. The UK Information Commissioner's Office (ICO) underscored this in its enforcement action against Serco Leisure, which used FRT for attendance monitoring. The ICO found that employees had not been offered a genuine alternative and, given their dependency on their employer, were unlikely to feel able to refuse.

The Narrow Path of "Substantial Public Interest" (Article 9(2)(g)): This condition permits the processing of special category data if it is necessary for reasons of substantial public interest and is based on Union or Member State law that is proportionate and includes specific safeguards for fundamental rights.

A comparable requirement governs law enforcement, although police processing for the prevention, investigation, or prosecution of crime falls under the Law Enforcement Directive (Directive (EU) 2016/680), which parallels the GDPR, rather than under Article 9 itself. Either way, the validity of the processing is contingent on the existence of a clear, precise, and foreseeable legal framework governing the technology's use. In the landmark UK case, R (Bridges) v South Wales Police, the Court of Appeal found the police force's use of LFR to be unlawful precisely because such a framework was absent, leaving too much discretion to individual officers.

Commercial entities face an even higher bar. The Spanish Data Protection Authority (AEPD) fined the supermarket chain Mercadona for using FRT to identify individuals with restraining orders, ruling that a commercial interest in preventing theft did not qualify as a "substantial public interest" sufficient to justify processing the biometric data of all customers.

Other Conditions: The remaining conditions under Article 9, such as protecting the "vital interests" of a person (e.g., in a medical emergency) or for the "establishment, exercise or defence of legal claims," are applicable only in very specific and narrow circumstances and cannot serve as a basis for the systematic or widespread deployment of FRT.

3.2 The Mandate for a Data Protection Impact Assessment (DPIA)

Under Article 35 of the GDPR, a Data Protection Impact Assessment (DPIA) is a mandatory prerequisite for any processing that is "likely to result in a high risk to the rights and freedoms of natural persons". The use of FRT almost invariably triggers this requirement. Regulatory guidance, such as that from the UK's ICO, explicitly lists large-scale processing of biometric data and the use of innovative technology like facial recognition as activities that demand a DPIA.

A DPIA is not a mere procedural checkbox; it is a substantive legal hurdle. It requires the data controller to conduct a rigorous and systematic analysis of the proposed processing. This assessment must include:

A detailed description of the processing operations and their purposes.

An assessment of the necessity and proportionality of the processing in relation to the purposes.

A thorough identification and assessment of the risks to the rights and freedoms of data subjects (including privacy, discrimination, and security risks).

A clear outline of the measures envisaged to address and mitigate those risks, including technical and organizational safeguards.

The critical importance of the DPIA is evident in recent enforcement actions and court rulings. In the Bridges case, the court found the police force's DPIA to be deficient because it proceeded on the premise that the right to privacy was not infringed and therefore failed to properly assess the risks to individuals' rights, including those of people not on the watchlist. Similarly, the Spanish DPA cited an "insufficient and incomplete" DPIA as a key failing in the Mercadona case. These cases demonstrate that a flawed DPIA can be a primary cause for an entire processing operation to be deemed unlawful, as it reveals a failure of the accountability principle from the very outset. The DPIA is the crucible in which the controller must demonstrate its compliance with the core GDPR principles of necessity, proportionality, and risk mitigation.

Across these legal challenges, a clear pattern emerges: the central battleground for the lawful use of FRT is the "necessity and proportionality" test. Regulators and courts consistently focus not on whether FRT can be used, but on whether its use is truly necessary and whether less privacy-invasive alternatives have been considered and exhausted. A mere assertion of improved efficiency or security is insufficient. The burden of proof lies squarely with the data controller to provide a robust, evidence-based justification for why FRT is the only viable and proportionate means to achieve a legitimate aim. This principle serves as the GDPR's ultimate brake on technological implementation for its own sake, demanding that data protection be an integral part of the design process, not an afterthought.

IV. The Anatomy of Risk: Privacy, Bias, and Security

4.1 The End of Anonymity?: Mass Surveillance and Chilling Effects

The widespread deployment of FRT in publicly accessible spaces poses an unprecedented threat to individual anonymity. Unlike forms of tracking that depend on a device a person chooses to carry, FRT needs only a person's face, which is usually exposed in public. This enables the creation of what critics have termed a "perpetual police line-up," where the movements, locations, and associations of any individual can be passively and persistently monitored without their knowledge or consent.

This capability for mass surveillance can create a profound "chilling effect" on fundamental freedoms. The knowledge or even the possibility of being monitored can discourage individuals from exercising their rights to free expression and peaceful assembly. People may become hesitant to attend political protests, visit sensitive locations like places of worship or health clinics, or associate with certain groups for fear of being misidentified, tracked, or having their presence recorded by authorities or corporations. Civil liberties organizations, such as the American Civil Liberties Union (ACLU), argue that this erosion of public anonymity is one of the gravest threats FRT poses to a free and democratic society. This concern is shared at the highest levels of European data protection regulation. The EDPB has explicitly stated that the remote biometric identification of individuals in public spaces entails mass surveillance, "does not have a place in a democratic society," and has consequently called for a ban on such uses.

4.2 The Ghost in the Machine: Algorithmic Bias and Discrimination

A significant and well-documented flaw in many FRT systems is algorithmic bias. Comprehensive studies by institutions like the U.S. National Institute of Standards and Technology (NIST) have found that many algorithms exhibit significant demographic disparities in their accuracy.

These systems frequently demonstrate higher false positive rates—incorrectly matching a person to an image in a database—for women, racial minorities (particularly individuals of Black and East Asian descent), children, and the elderly when compared to their performance on middle-aged white men. The error rate for darker-skinned women, in particular, can be orders of magnitude higher than for light-skinned men.
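Disparities of this kind are typically quantified by running many impostor comparisons (pairs of images of two different people) for each demographic group and counting how often the system wrongly declares a match. The Python sketch computes a per-group false match rate and its ratio to a reference group. The function names, group labels, scores, and threshold are made-up illustrative values, not results from NIST or any other evaluation.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def false_match_rate_by_group(impostor_trials: Iterable[Tuple[str, float]],
                              threshold: float) -> Dict[str, float]:
    """Per-group false match rate.

    Each trial is (group, score), where the score compares images of two
    different people, so any score above the threshold is a false match.
    """
    totals: Dict[str, int] = defaultdict(int)
    false_matches: Dict[str, int] = defaultdict(int)
    for group, score in impostor_trials:
        totals[group] += 1
        if score > threshold:
            false_matches[group] += 1
    return {group: false_matches[group] / totals[group] for group in totals}


def disparity_ratios(rates: Dict[str, float], reference: str) -> Dict[str, float]:
    """How many times higher each group's false match rate is than the reference's."""
    baseline = rates[reference]
    if baseline == 0:
        raise ValueError("reference group has no false matches; ratio undefined")
    return {group: rate / baseline for group, rate in rates.items()}


# Made-up impostor scores for two hypothetical groups.
trials = [("group_a", 0.85), ("group_a", 0.90), ("group_a", 0.30), ("group_a", 0.20),
          ("group_b", 0.82), ("group_b", 0.10), ("group_b", 0.20), ("group_b", 0.30)]
rates = false_match_rate_by_group(trials, threshold=0.8)
print(rates)                                         # {'group_a': 0.5, 'group_b': 0.25}
print(disparity_ratios(rates, reference="group_b"))  # {'group_a': 2.0, 'group_b': 1.0}
```

Because the same threshold applies to every group, a single system-wide setting can yield very different false match rates for different populations, which is why evaluations report results per group rather than as one overall accuracy figure.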

The primary root of this bias lies in the data used to train the algorithms. When machine learning models are trained on datasets that are not diverse and are predominantly composed of images of white males, they become less accurate at identifying the unique facial features of underrepresented demographic groups. This technical failing has severe real-world consequences. In the context of law enforcement, a biased false match can lead to a wrongful investigation, a false arrest, and potentially a wrongful conviction, with these harms disproportionately falling upon already marginalized communities. This creates a vicious cycle: historical over-policing leads to an over-representation of minority faces in mugshot databases; these biased databases are then searched by biased algorithms, which increases the likelihood of a false match for individuals from those same communities. This, in turn, can lead to more police encounters and arrests, further populating the databases and reinforcing the cycle. The technology, in this way, does not just reflect existing societal biases but actively amplifies and entrenches them.

4.3 New Frontiers of Vulnerability: Data Security and Deepfakes

The security of FRT systems is a paramount concern due to the unique nature of biometric data. Unlike a password or a credit card number that can be changed or cancelled after a breach, a person's face is a permanent identifier. A data breach of a database containing facial templates creates an irreversible risk of identity theft, stalking, and harassment for every individual whose data is compromised. This permanence fundamentally alters the risk calculus for data controllers. The potential severity of harm from a breach is not temporary but perpetual, which elevates the standard of "appropriate technical and organisational measures" required under GDPR's Article 32 to an exceptionally high level.

FRT systems are also vulnerable to various forms of attack. "Spoofing" or presentation attacks involve an adversary using a static photo, a video, or a 3D mask of a legitimate user to fool the system. The increasing sophistication of "deepfakes"—hyper-realistic, AI-generated synthetic images and videos—presents a formidable threat. Research has demonstrated that many commercial "liveness detection" systems, which are designed to prevent spoofing by detecting signs of a live person (like blinking), can be successfully bypassed by deepfake attacks, undermining the security of authentication systems. Other emerging threats include "backdoor attacks," where a system can be compromised during the enrollment phase. By enrolling a single poisoned image (e.g., a person wearing a specific pair of glasses), an attacker can create a universal key that allows anyone wearing that same trigger to gain unauthorized access.

V. Regulation in Action: Enforcement, Jurisprudence, and Guidance

5.1 The Watchdogs' Verdict: Pan-European Enforcement

Data Protection Authorities across Europe have taken decisive and consistent action against unlawful uses of FRT, establishing clear precedents for what constitutes non-compliance with the GDPR.

The Case of Clearview AI: The company Clearview AI, which built its business model on scraping billions of facial images from the public internet to create a searchable database for law enforcement, has faced a unified regulatory front.

The Italian Garante imposed a €20 million fine for processing data without a legal basis and violating core GDPR principles.

The French CNIL levied a €20 million fine for similar violations, adding a €5.2 million penalty for failure to comply with its initial order.

The Dutch DPA issued a €30.5 million fine for the creation of an illegal database of biometric codes.

The UK's ICO also fined the company £7.5 million for unlawfully processing the data of UK residents.

These consistent enforcement actions demonstrate a powerful, pan-European consensus: scraping publicly available images to create a biometric database for a new purpose is unlawful under the GDPR. They establish that "publicly available" does not equate to "free to use for any purpose," especially for creating sensitive biometric templates.

Workplace Monitoring: The UK ICO's order against Serco Leisure to cease using FRT and fingerprint scanning for employee attendance monitoring is a landmark decision on the use of biometrics in the workplace. The regulator found the processing was not necessary or proportionate and, critically, that employees had not been offered a clear alternative and, given the inherent power imbalance in the employer-employee relationship, were unlikely to feel free to refuse.

Commercial Surveillance: The Spanish AEPD's €2.52 million fine against the supermarket chain Mercadona serves as a stark warning to commercial entities. The authority rejected the company's attempt to use FRT to identify individuals subject to restraining orders, ruling that its commercial interest did not meet the high threshold of "substantial public interest" required to process special category data under Article 9.

5.2 Judicial Scrutiny: The Bridges Precedent

The judiciary has also played a crucial role in defining the legal boundaries for FRT. In the landmark UK case R (Bridges) v South Wales Police, the Court of Appeal ruled that the police force's use of live automated facial recognition (AFR) in public spaces was unlawful.

The court's decision was multifaceted. It found that the deployment violated the right to respect for private life under Article 8 of the European Convention on Human Rights (ECHR) because the existing legal framework was too vague: it granted excessive discretion to individual police officers to decide who should be placed on a watchlist and where the technology could be deployed. The ruling also identified clear breaches of UK data protection law, highlighting a deficient DPIA that failed to properly assess the human rights implications and the indiscriminate processing of data from thousands of non-targeted individuals. In addition, the court held that the force had breached its public sector equality duty by failing to take reasonable steps to verify whether the software was biased on grounds of race or sex. The Bridges judgment established a vital precedent: even if a technology serves a legitimate aim, its use is unlawful unless governed by a sufficiently clear, precise, and safeguarded legal framework that constrains discretion and protects fundamental rights.

5.3 The EDPB's Guiding Hand: Setting the Tone for the EU

The European Data Protection Board (EDPB), which brings together representatives of the national DPAs and the European Data Protection Supervisor (EDPS), provides authoritative guidance on the interpretation of the GDPR, setting a consistent tone for enforcement across the Union.

Law Enforcement Guidelines: In its guidelines on FRT use by law enforcement, the EDPB stressed that any processing of biometric data must be "strictly necessary" and authorized by a clear and precise law with robust safeguards. It explicitly rejected the notion that an image being publicly available means the biometric data derived from it has been "manifestly made public" by the data subject—a direct challenge to the logic of data scraping business models. The Board has also called for an outright ban on certain high-risk applications, most notably the use of remote biometric identification in public spaces for mass surveillance.

Airport Opinion (2024): In a forward-looking opinion on the use of FRT to streamline passenger flow at airports, the EDPB analyzed various data storage models. It concluded that only solutions where the passenger retains control over their own biometric template—such as storing it on a personal device or holding the sole encryption key to a centralized record—could be compatible with the GDPR principles of data protection by design and by default. Centralized storage models controlled by the airport or airline were deemed non-compliant. This opinion is not merely a reactive interpretation of the law; it is a proactive move to shape the future market for FRT. By rejecting insecure, centralized architectures, the EDPB is sending a powerful signal to technology developers that privacy-preserving, decentralized systems are the only viable path forward for commercial FRT applications in the EU.

VI. The Global Context and the Path Forward

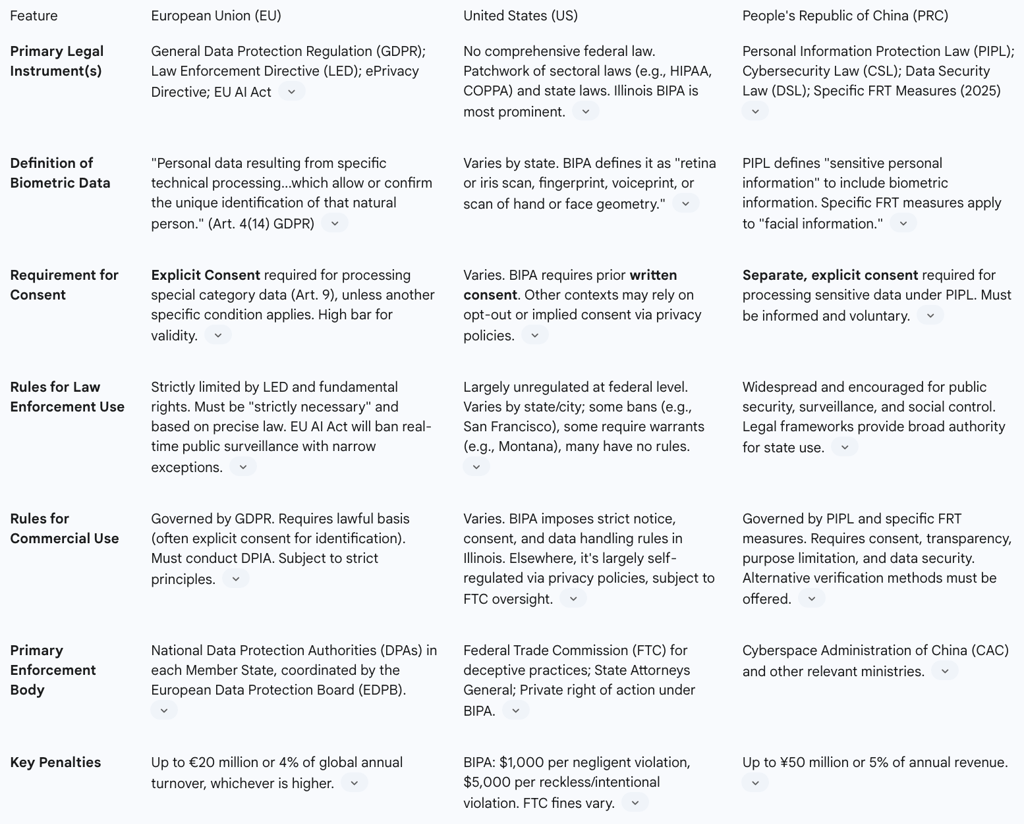

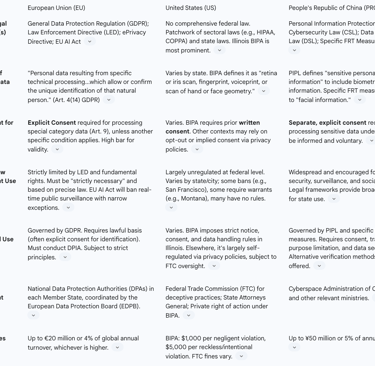

6.1 A Tale of Three Frameworks: EU vs. US vs. China

The global regulatory landscape for facial recognition technology is characterized by three distinct and divergent philosophical approaches, reflecting differing societal priorities regarding privacy, security, and commerce.

The European Union: The EU has adopted a comprehensive, rights-based model. Grounded in the GDPR and now supplemented by the AI Act (Regulation (EU) 2024/1689), whose obligations are being phased in, this approach treats biometric data as inherently sensitive and requires a strong, justifiable legal basis for any processing. The framework prioritizes the fundamental rights of the individual, placing strict limitations on both state and commercial actors.

The United States: The U.S. employs a fragmented, sectoral approach, lacking an overarching federal law for FRT. Regulation is a patchwork of state-level laws and sector-specific rules. The Illinois Biometric Information Privacy Act (BIPA) is the most stringent, requiring written consent and providing a private right of action that has spurred significant litigation. Other states have enacted various measures, from outright bans on law enforcement use to warrant requirements, but many have no specific rules at all. Federal use is governed by disparate agency policies and constitutional law, not a unified statute.

China: China's framework is state-centric, designed to facilitate the broad use of FRT for public security, social governance, and economic efficiency. While laws like the Personal Information Protection Law (PIPL) and new FRT-specific measures impose consent and transparency requirements on commercial operators, they contain wide-ranging exceptions for national security and public safety and place comparatively weak constraints on government agencies. This enables the state to leverage FRT for pervasive surveillance and social control, as seen in the Social Credit System.

This divergence is fueling what is known as the "Brussels Effect" in AI governance. The EU's dual-layered approach of regulating the data (via the GDPR) and the technology itself (via the AI Act) sets a high-water mark for a rights-respecting framework. Because multinational technology companies often prefer to engineer their products to a single, stringent global standard rather than navigate an inconsistent patchwork of laws, they are likely to build their systems to comply with the EU model. In this way, EU regulation is poised to become a de facto global standard, particularly among democratic nations, shaping the design and deployment of FRT worldwide.

6.2 The Next Layer of Governance: The EU AI Act

The EU AI Act (Regulation (EU) 2024/1689), which entered into force in August 2024 and whose obligations apply in stages, adds a new layer of regulation that complements the GDPR. While the GDPR governs the processing of personal data, the AI Act regulates AI systems, including FRT, as products placed on the EU market. It establishes a risk-based hierarchy:

Unacceptable Risk: The Act prohibits certain AI practices deemed to pose an unacceptable threat to fundamental rights. These include social scoring, emotion recognition in workplaces and educational institutions (except for medical or safety reasons), and, critically, the untargeted scraping of facial images from the internet or CCTV footage to create or expand facial recognition databases, a direct legislative ban on the Clearview AI business model. It also bans real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, subject to narrow, exhaustively listed exceptions such as the targeted search for specific victims, the prevention of an imminent threat to life or of a terrorist attack, and locating suspects of certain serious crimes. These prohibitions have applied since 2 February 2025.

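High Risk: Many other FRT applications are classified as "high-risk," notably remote biometric identification systems that are not prohibited outright and AI systems that serve as safety components of regulated products. By contrast, biometric verification whose sole purpose is to confirm that a person is who they claim to be (for example, unlocking a device or entering a building) is expressly excluded from the high-risk biometric category. High-risk systems are subject to stringent obligations before they can be placed on the market, including rigorous risk management, high-quality data governance, technical documentation and logging, transparency towards deployers, mandatory human oversight, and high levels of accuracy, robustness, and cybersecurity.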
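The tiering logic described above can be sketched as a simple decision function. This is a deliberately simplified, hypothetical illustration (the `FrtUseCase` fields and the `classify` function are invented for this example), not a legal determination: real classification turns on the Act's full text, its exceptions, and case-by-case analysis. The sketch checks prohibitions first, and it reflects that the Act excludes 1:1 biometric verification from the high-risk biometric category in Annex III.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    # May still be high-risk for other reasons (e.g. as a product safety component).
    ASSESS_SEPARATELY = "not high-risk under the biometrics heading; assess separately"


@dataclass(frozen=True)
class FrtUseCase:
    """Hypothetical, simplified description of an FRT deployment."""
    untargeted_scraping: bool = False            # building face databases from web/CCTV
    emotion_recognition: bool = False
    in_workplace_or_school: bool = False
    medical_or_safety_purpose: bool = False      # carve-out for emotion recognition
    realtime_public_law_enforcement: bool = False
    narrow_exception_authorised: bool = False    # e.g. targeted search for a victim
    remote_identification: bool = False          # 1:N identification at a distance
    verification_only: bool = False              # 1:1 "is this who they claim to be?"


def classify(use: FrtUseCase) -> RiskTier:
    """Simplified tiering: prohibitions are checked before high-risk rules."""
    if use.untargeted_scraping:
        return RiskTier.PROHIBITED
    if (use.emotion_recognition and use.in_workplace_or_school
            and not use.medical_or_safety_purpose):
        return RiskTier.PROHIBITED
    if use.realtime_public_law_enforcement and not use.narrow_exception_authorised:
        return RiskTier.PROHIBITED
    if use.verification_only:
        # 1:1 verification is excluded from the Annex III biometrics heading.
        return RiskTier.ASSESS_SEPARATELY
    # Authorised exceptional uses are still remote identification, hence high-risk.
    if (use.remote_identification or use.realtime_public_law_enforcement
            or use.emotion_recognition):
        return RiskTier.HIGH_RISK
    return RiskTier.ASSESS_SEPARATELY
```

For example, `classify(FrtUseCase(verification_only=True))` lands in the residual bucket, while `classify(FrtUseCase(untargeted_scraping=True))` is prohibited regardless of any other flag.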

6.3 Future-Proofing Compliance: Emerging Technologies and Norms

The technological landscape of FRT is evolving in ways that may facilitate, rather than hinder, GDPR compliance.

Technological Advancements: Ongoing research focuses on improving accuracy and security. This includes 3D facial authentication, which captures the depth and contour of a face to provide more accurate results and resist spoofing with 2D images, and multi-modal biometrics, which combine facial scans with other identifiers like voice or iris patterns for enhanced security. Liveness detection, which checks that a live person rather than a photo, mask, or replayed video is in front of the sensor, is also being strengthened to counter increasingly sophisticated deepfake attacks.
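Score-level fusion is one common way for multi-modal systems to combine identifiers. The sketch below assumes each matcher (face, voice, iris) already outputs a raw similarity score; it normalises each score, combines them with a weighted sum, and rejects the attempt outright if the liveness check fails. The weights, threshold, and function names are hypothetical, and real systems may instead fuse at the feature or decision level.

```python
from typing import Dict


def min_max_normalise(raw: float, lo: float, hi: float) -> float:
    """Map a raw matcher score onto [0, 1], clipping out-of-range values."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return min(1.0, max(0.0, (raw - lo) / (hi - lo)))


def fused_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of normalised per-modality scores."""
    if not scores:
        raise ValueError("at least one modality score is required")
    total_weight = sum(weights[m] for m in scores)
    return sum(weights[m] * scores[m] for m in scores) / total_weight


def accept(scores: Dict[str, float], weights: Dict[str, float],
           threshold: float, liveness_passed: bool) -> bool:
    """Reject spoof attempts before matching; otherwise compare fused score."""
    if not liveness_passed:
        return False
    return fused_score(scores, weights) >= threshold
```

With hypothetical weights of 0.7 for face and 0.3 for voice, a strong face score can compensate for a weaker voice score, but no score combination can override a failed liveness check.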

Edge Computing: Perhaps the most significant trend is the shift towards edge computing, in which biometric processing is performed and the template is stored locally on a user's device (e.g., a smartphone or a smart camera at a doorway) rather than transmitted to a centralized cloud server. This architectural shift is, in large part, a response to the legal and societal pressures articulated by the GDPR and regulators like the EDPB. Keeping the biometric template on the user's device aligns with data minimisation (no central operator holds a database of everyone's templates), enhances security (eliminating a central "honeypot" of data), and strengthens individual control. It is a powerful example of how strong, rights-based regulation can steer technological innovation towards more privacy-preserving outcomes.
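A minimal sketch of the edge pattern, assuming a separate on-device model has already turned each face image into a numeric embedding (that step is out of scope here). The `OnDeviceMatcher` class, its threshold, and the toy vectors are hypothetical. The point is architectural: enrolment, comparison, and erasure all happen locally, and the calling application only ever receives a boolean. Python's leading underscore is a naming convention, not enforcement; a real device would keep the template in hardware-backed secure storage.

```python
import math
from typing import List, Optional, Sequence


def _unit(vector: Sequence[float]) -> List[float]:
    """Scale an embedding to unit length so the dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("embedding must be non-zero")
    return [x / norm for x in vector]


class OnDeviceMatcher:
    """Hypothetical edge matcher: the template never leaves this object;
    callers only learn whether a probe matched."""

    def __init__(self, threshold: float = 0.9) -> None:
        self._template: Optional[List[float]] = None
        self._threshold = threshold

    def enrol(self, embedding: Sequence[float]) -> None:
        self._template = _unit(embedding)

    def verify(self, embedding: Sequence[float]) -> bool:
        if self._template is None:
            return False
        probe = _unit(embedding)
        if len(probe) != len(self._template):
            raise ValueError("embedding dimensions differ")
        similarity = sum(a * b for a, b in zip(self._template, probe))
        return similarity >= self._threshold

    def erase(self) -> None:
        """Support erasure on request by discarding the stored template."""
        self._template = None
```

Because there is no server-side copy, honouring an erasure request reduces to a single local call rather than a search across central databases and backups.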

Evolving Societal Norms: Public opinion continues to shape the future of FRT. While convenience is valued, growing awareness of the risks of surveillance and bias is driving demands for greater transparency, accountability, and individual control over one's own biometric data.

VII. Conclusion and Recommendations: Striking the Balance

7.1 Synthesizing the Challenge: A Paradigm Clash

The analysis presented in this report reveals a fundamental paradigm clash. Facial Recognition Technology, in its most powerful forms, is a tool of mass data collection and identification. The GDPR, conversely, is a legal framework built upon the foundational principles of data minimisation, purpose limitation, and individual control. The evidence from regulatory enforcement, judicial rulings, and authoritative guidance demonstrates that a "business as usual" approach to deploying FRT—one that prioritizes technological capability over fundamental rights—is legally and ethically untenable in the European Union. The consistent message from authorities is clear: organizations that fail to rigorously demonstrate the necessity, proportionality, and robust safeguarding of their FRT systems will face significant legal and financial consequences.

7.2 A Roadmap for Responsible Innovation

To navigate this complex landscape, stakeholders must adopt a proactive, rights-respecting approach. The following recommendations provide a roadmap for policymakers, technology developers, and organizations deploying FRT.

For Policymakers and Regulators:

Provide Clear Legal Frameworks: Drawing on the reasoning of the UK Court of Appeal in the Bridges case, EU Member States must establish clear, precise, and foreseeable laws governing the use of FRT by public authorities, particularly law enforcement. These frameworks must strictly define permissible purposes, limit discretion, and mandate robust safeguards.

Promote International Standards: Work with standards bodies such as ISO/IEC and the U.S. National Institute of Standards and Technology (NIST) to develop and adopt global standards for FRT accuracy, bias testing, and security, ensuring a baseline of technical reliability and fairness.

Foster Public Debate and Education: Actively engage the public in a democratic debate about the societal trade-offs of FRT. Public education campaigns can foster an informed dialogue about where the lines of acceptable use should be drawn.

For Technology Developers (The Supply Side):

Embed Privacy by Design: Make GDPR's Article 25, "Data Protection by Design and by Default," a core engineering principle. Prioritize the development of privacy-preserving architectures, such as on-device processing and edge computing, which align with regulatory guidance and minimize data exposure.

Aggressively Mitigate Bias: Invest significant resources in curating diverse, representative, and ethically sourced training datasets. Conduct regular, transparent audits of algorithmic performance across all demographic groups and publish the results to demonstrate a commitment to fairness and accuracy.
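A demographic audit of this kind typically compares error rates across groups. The sketch below computes, per group, the false non-match rate (FNMR: genuine pairs wrongly rejected) and the false match rate (FMR: impostor pairs wrongly accepted) from labelled test trials, then reports the largest gap between groups. The `Trial` record, the group labels, and the use of an absolute gap as the disparity metric are assumptions for illustration; a real audit needs large, representative test sets and confidence intervals.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Trial(NamedTuple):
    group: str      # demographic group label used for the audit
    genuine: bool   # True if both images show the same person
    matched: bool   # True if the system declared a match


def error_rates(trials: Iterable[Trial]) -> Dict[str, Dict[str, float]]:
    """Return FNMR and FMR per group (NaN where a group has no such pairs)."""
    counts = defaultdict(lambda: {"genuine": 0, "missed": 0,
                                  "impostor": 0, "false_match": 0})
    for t in trials:
        c = counts[t.group]
        if t.genuine:
            c["genuine"] += 1
            c["missed"] += int(not t.matched)
        else:
            c["impostor"] += 1
            c["false_match"] += int(t.matched)
    return {
        group: {
            "FNMR": c["missed"] / c["genuine"] if c["genuine"] else math.nan,
            "FMR": c["false_match"] / c["impostor"] if c["impostor"] else math.nan,
        }
        for group, c in counts.items()
    }


def max_gap(rates: Dict[str, Dict[str, float]], metric: str) -> float:
    """Largest difference in `metric` between any two groups, ignoring NaN."""
    values = [r[metric] for r in rates.values() if not math.isnan(r[metric])]
    return max(values) - min(values) if values else 0.0
```

Publishing per-group FNMR and FMR, rather than a single aggregate accuracy figure, is what makes disparities visible: a system can look accurate overall while failing one group far more often.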

Empower Downstream Compliance: Provide data controllers with transparent and comprehensive documentation on the technology's capabilities, limitations, and potential risks. Build granular controls into the software that allow controllers to easily configure the system in a way that is compliant with principles like data minimisation and storage limitation.
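One way a vendor might expose such controls is a retention policy that the controller configures and the system enforces. Everything here (`RetentionPolicy`, `BiometricRecord`, `apply_policy`, and the 30-day figure in the test data) is hypothetical; the actual retention period must come from the controller's own necessity assessment. The defaults are chosen to be privacy-protective, so keeping raw images requires a deliberate opt-in.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class RetentionPolicy:
    """Hypothetical controller-facing settings exposed by the vendor."""
    max_age: timedelta
    keep_raw_images: bool = False  # privacy-protective default: templates only


@dataclass(frozen=True)
class BiometricRecord:
    subject_id: str
    created_at: datetime
    template: bytes
    raw_image: Optional[bytes] = None


def apply_policy(records: List[BiometricRecord], policy: RetentionPolicy,
                 now: datetime) -> List[BiometricRecord]:
    """Drop expired records (storage limitation) and strip raw images unless
    the controller has explicitly enabled keeping them (data minimisation)."""
    kept = []
    for record in records:
        if now - record.created_at > policy.max_age:
            continue  # expired: not carried forward
        if record.raw_image is not None and not policy.keep_raw_images:
            record = replace(record, raw_image=None)
        kept.append(record)
    return kept
```

In a real deployment, the same policy would also have to reach logs, caches, and backups; purging only the primary store does not satisfy storage limitation.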

For Data Controllers (The Demand Side):

Treat the DPIA as Foundational: The DPIA must be the first step in any FRT project, not a compliance afterthought. It should be a rigorous, candid, and comprehensive assessment of the necessity, proportionality, and risks to fundamental rights. If less intrusive alternatives can achieve the purpose, they must be used.

Scrutinize the Lawful Basis: Do not default to "consent" as a legal basis, particularly in situations involving a power imbalance (e.g., employment) or in public spaces. If relying on "substantial public interest," ensure it is genuinely applicable and supported by a specific, robust legal framework.

Champion Radical Transparency: Use clear, simple, and layered notices to inform individuals whenever FRT is in use. This information must explain the specific purpose of the processing, the legal basis, data retention periods, and the individual's rights under the GDPR, including how to exercise them and how to opt out where an alternative is available.

Mandate Meaningful Human Oversight: For any FRT system whose output could have a legal or similarly significant effect on an individual, ensure that the final decision is made by a trained human operator with the authority and competence to overrule the system, rather than one who simply rubber-stamps its output. This is not only a best practice for mitigating bias but also a crucial safeguard for complying with GDPR Article 22 on automated decision-making.
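The oversight requirement can be enforced in software by ensuring that an FRT match only ever produces a candidate for review, never a final decision. In this hypothetical sketch (`MatchAlert`, `Review`, and `decide` are illustrative names), an alert above the threshold stays pending until a trained reviewer records both a decision and a written rationale. Requiring the rationale is one assumed way to discourage rubber-stamping, not a requirement drawn from the text of Article 22.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    NO_ACTION = "below threshold: no action"
    PENDING_REVIEW = "pending human review"
    CONFIRMED = "match confirmed by human reviewer"
    REJECTED = "match rejected by human reviewer"


@dataclass(frozen=True)
class MatchAlert:
    candidate_id: str
    score: float  # similarity score produced by the FRT system


@dataclass(frozen=True)
class Review:
    reviewer_id: str
    reviewer_trained: bool
    confirms_match: bool
    rationale: str


def decide(alert: MatchAlert, threshold: float,
           review: Optional[Review] = None) -> Outcome:
    """The system may only flag; any consequential outcome needs a human."""
    if alert.score < threshold:
        return Outcome.NO_ACTION
    if (review is None or not review.reviewer_trained
            or not review.rationale.strip()):
        return Outcome.PENDING_REVIEW
    return Outcome.CONFIRMED if review.confirms_match else Outcome.REJECTED
```

The design choice is that no code path maps a high score directly to CONFIRMED: the human reviewer can reject a high-scoring match, and the system can never confirm one on its own.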